IBM began collaborating with NASA six months ago on an AI foundation model designed to accelerate satellite image analysis and foster scientific discovery. Now publicly available through Hugging Face, it is one of the largest geospatial foundation models hosted on the platform and the first open-source foundation model built in collaboration with NASA.

What is a foundation model?

Foundation models are artificial intelligence models pre-trained on large, diverse data sets. They serve as building blocks for other AI models, which speeds up the creation of those models.

Foundation models are already changing how AI applications are developed. One notable example is ChatGPT, a chatbot built on the GPT-3.5 foundation models. By fine-tuning such models on additional chatbot-specific data, developers can build systems that perform well in specific scenarios.

A model such as GPT-3.5 can be adapted into chatbots that help people track their health, or into tools that detect suspicious text in documents and emails. In both cases the foundation model supplies the pre-trained knowledge, which is then applied to the new situation, reducing false positives and speeding up manual review.

Foundation models can make AI models both faster to build and more accurate. Google’s GraphCast emulator, for example, predicted Hurricane Lee’s path about nine days in advance, earlier than traditional forecasting systems.

But foundation models do present unique challenges. They require access to large volumes of data, can be vulnerable to security attacks, and are energy intensive, which makes cloud deployment difficult.

IBM and NASA are joining forces to solve these challenges. Recently, they announced their partnership to develop a geospatial foundation model which can make weather and climate applications faster and more accurate – this system will be created with assistance from NASA domain experts and then open-sourced.

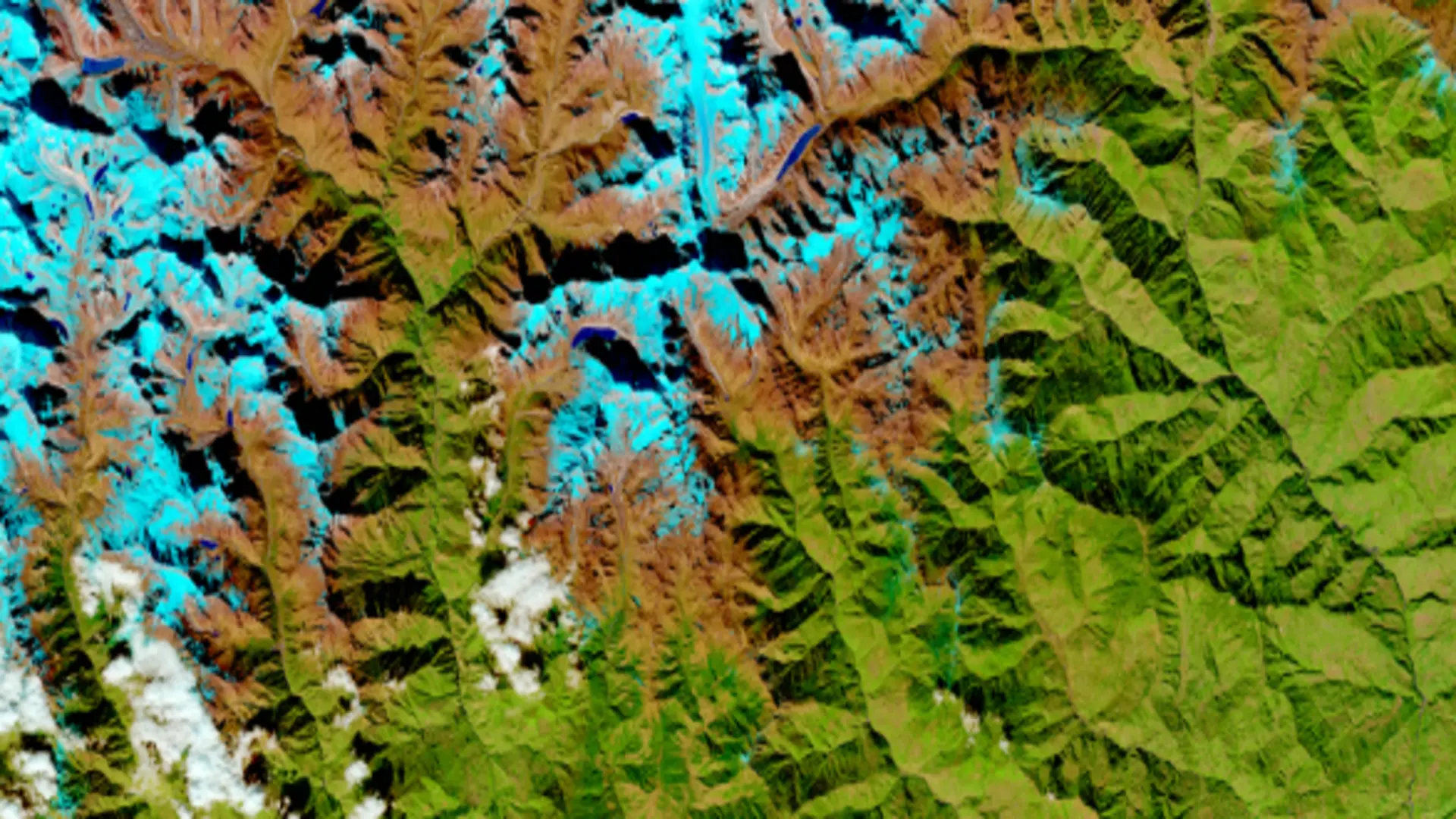

The IBM/NASA team is using the Transformer architecture to build a foundation model for geospatial data. A Transformer is an AI architecture that can turn large amounts of raw data into compact representations capturing the data’s basic structure. These representations act as scaffolding for training other AI models on specific tasks, such as processing satellite imagery to identify flooding, wildfires and drought areas.

The foundation model will eventually also help track deforestation and reforestation dynamics more closely.
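The article does not describe how imagery is fed to the Transformer. A common approach for image Transformers, assumed here purely for illustration, is to cut each image into small non-overlapping patches and treat every patch as one input token. The function name `patchify` and the 4x4 tile below are hypothetical.

```python
from typing import List

Image = List[List[float]]  # one spectral band of a satellite tile, rows x cols


def patchify(image: Image, patch: int) -> List[List[float]]:
    """Split a 2-D image into non-overlapping patch x patch tiles.

    Each tile is flattened row by row into one vector ("token").
    """
    rows, cols = len(image), len(image[0])
    if rows % patch or cols % patch:
        raise ValueError("image sides must be multiples of the patch size")
    tokens = []
    for r in range(0, rows, patch):
        for c in range(0, cols, patch):
            tokens.append(
                [image[r + i][c + j] for i in range(patch) for j in range(patch)]
            )
    return tokens


# Hypothetical 4x4 single-band tile holding the values 0..15.
tile = [[float(4 * r + c) for c in range(4)] for r in range(4)]
tokens = patchify(tile, patch=2)  # four tokens of four values each
```

Each token is then mapped to a vector by the model; the compact representations mentioned above are what the Transformer computes from these tokens.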

How does it work?

NASA’s vast library of satellite imagery is an incredible source of knowledge, but its sheer volume can be daunting to navigate. To make this data easier to comprehend and analyze, IBM and NASA began collaborating six months ago on a geospatial foundation model.

The result, now available on Hugging Face, is a model called HLS Geospatial FM, which quickly turns NASA satellite observations into maps of natural disasters and environmental changes.

The model was trained on Harmonized Landsat Sentinel-2 (HLS) data collected across the continental US over one year and fine-tuned to map flood and fire scars. It can process this type of data four times faster than state-of-the-art deep learning models, using half as many labeled samples.

The model is a Transformer rather than a generative adversarial network (GAN), and it is pre-trained in a self-supervised way. Parts of each satellite image are hidden, the model tries to reconstruct them, and the reconstruction error serves as the feedback that improves its next attempt. Because this requires no labels, the model can be trained on image datasets from all over the world.

Priya Nagpurkar, who oversees IBM’s hybrid cloud platform and developer productivity research strategy, credits this approach with speeding up AI model creation. “Foundation models are created using unlabeled data sets in order to train more focused AI systems,” Nagpurkar explained.

IBM and NASA hope that making the model freely available will encourage Earth science research globally. They are currently working with researchers at Clark University, the UAE’s Mohamed Bin Zayed University of Artificial Intelligence and the government of Kenya to tailor it to their applications, for instance mapping urban heat islands or tracking reforestation in Kenya.

Future versions are also planned to support applications such as monitoring greenhouse gases and forecasting extreme weather events.

What is the future of foundation models?

Foundation models are revolutionizing natural language processing (NLP): developers can train one model on text and then quickly customize it for different tasks without retraining it entirely.

This is a significant departure from earlier practice, when NLP models were built specifically for each task. Multimodal AI is now emerging as well, with foundation models applied across speech, vision and language to understand complex content more effectively.

The same strategy lets NLP systems tackle a wide array of tasks and helps users from diverse backgrounds access information more quickly and efficiently. Foundation models form the backbone of this approach, and their performance is expected to improve as new architectures, training techniques and data augmentation strategies emerge, enabling even more powerful AI applications.
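The train-once, customize-per-task pattern described above can be sketched in miniature. In this toy example, assumed for illustration only, a fixed function stands in for the pre-trained model and is never updated, while a small logistic-regression "head" is trained separately for each task. All names (`frozen_backbone`, `train_head`, `predict`) and the data points are hypothetical; a real foundation model would be a large neural network.

```python
import math
from typing import List, Sequence, Tuple

Example = Tuple[Sequence[float], int]  # (input, binary label)


def frozen_backbone(x: Sequence[float]) -> List[float]:
    """Hypothetical stand-in for a pre-trained model: a fixed feature map."""
    return [x[0] + x[1], x[0] - x[1], 1.0]  # the constant 1.0 acts as a bias


def train_head(data: Sequence[Example], epochs: int = 100, lr: float = 0.5) -> List[float]:
    """Fit a logistic-regression head on frozen features (batch gradient ascent)."""
    weights = [0.0, 0.0, 0.0]
    for _ in range(epochs):
        grad = [0.0, 0.0, 0.0]
        for x, y in data:
            feats = frozen_backbone(x)  # the backbone itself is never changed
            z = sum(w * f for w, f in zip(weights, feats))
            p = 1.0 / (1.0 + math.exp(-z))
            for k, f in enumerate(feats):
                grad[k] += (y - p) * f
        weights = [w + lr * g / len(data) for w, g in zip(weights, grad)]
    return weights


def predict(weights: Sequence[float], x: Sequence[float]) -> int:
    """Return 1 if the head scores the input above zero, else 0."""
    feats = frozen_backbone(x)
    return 1 if sum(w * f for w, f in zip(weights, feats)) > 0 else 0


# Two different tasks reuse the same backbone; only the small head differs.
points = [(1.0, 0.5), (0.5, 1.0), (-1.0, -0.5), (-0.5, -1.0)]
task_a = [(p, 1 if p[0] + p[1] > 0 else 0) for p in points]  # "is the sum positive?"
task_b = [(p, 1 if p[0] > p[1] else 0) for p in points]      # "is the first value larger?"
head_a = train_head(task_a)
head_b = train_head(task_b)
```

Only three numbers are learned per task here, which mirrors why adapting a pre-trained model is much cheaper than training a new model for every task.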

IBM and NASA have joined forces to develop an artificial intelligence foundation model for geospatial intelligence. Their aim is to make it easier for scientists, businesses, and individuals to build AI applications tailored to specific questions or tasks.

The model will automatically analyze petabytes of geospatial data in search of hidden structures, leaving researchers more time to focus on answering their specific questions or building customized models for their own needs.

The team has fine-tuned its foundation model with human-labeled data for mapping floods and wildfires from satellite images, but it can also be tailored for other uses, including deforestation tracking, monitoring mining sites and crop yield prediction. The partnership forms part of NASA’s Open-Source Science Initiative, a push towards making data, code and AI models freely available.

With the foundation model, geospatial data will become more accessible to the public, facilitating scientific discovery and innovation. It also empowers enterprises to develop strategies for mitigating and adapting to climate change.

IBM and NASA are working with other partners to use the model for climate risk evaluation, such as assessing surface water flooding and evaluating areas for tree planting, and for planning communities’ responses to the effects of climate change on their economies and infrastructure.

How can foundation models help us?

Foundation models fed enough raw data can piece together the structure of complex systems like code, natural language or molecules. They acquire this general knowledge without human-provided labels, a process known as self-supervised learning, and can then be adapted to perform specific tasks.

IBM and NASA collaborated to build a geospatial foundation model so that anyone can easily access and analyze satellite imagery. The benefits can be enormous: early warning of impending natural disasters can save lives, as well as money for logistics companies, businesses and government agencies.

An AI model capable of quickly interpreting geospatial data can help us detect patterns that are difficult to spot with the human eye and understand why certain phenomena, like earthquakes and tsunamis, occur.

The model relies on the Harmonized Landsat Sentinel-2 dataset, which records land-use changes across the globe, to detect events such as droughts and wildfires, track climate change impacts and evaluate habitats for biodiversity purposes.

IBM and NASA are working together to make it easier for developers to build AI applications capable of analyzing satellite data at scale. According to Ganti, they are developing foundation models that are pre-trained on the HLS dataset and then tested against the MERRA-2 dataset, which includes high-quality observations from fixed weather stations, floating weather balloons and planet-orbiting satellites.

Training a typical foundation model currently takes tens of thousands of GPU hours, an enormous barrier to entry for smaller companies with limited computing resources. A pre-trained model that can be reused instead of trained from scratch lowers that barrier considerably.

Foundation models have another key advantage over state-of-the-art deep learning models: they can be updated automatically without impacting application performance. This is possible because they are trained on unlabeled datasets in an unsupervised fashion, which is one reason they outperformed state-of-the-art deep learning models, according to Ganti.
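One widely used way to get a training signal from unlabeled data is masked reconstruction: hide part of the input, ask the model to fill it in, and score the guess against the hidden original. The sketch below is a toy illustration with hypothetical names (`mask_tokens`, `mean_fill`, `reconstruction_loss`) and made-up values, not the IBM/NASA training code.

```python
import random
from typing import List, Sequence, Tuple


def mask_tokens(
    tokens: Sequence[float], ratio: float, rng: random.Random
) -> Tuple[List[float], List[int]]:
    """Hide a random subset of tokens; return the masked copy and hidden indices."""
    n_hidden = max(1, int(len(tokens) * ratio))
    hidden = sorted(rng.sample(range(len(tokens)), n_hidden))
    hidden_set = set(hidden)
    masked = [0.0 if i in hidden_set else t for i, t in enumerate(tokens)]
    return masked, hidden


def mean_fill(masked: Sequence[float], hidden: Sequence[int]) -> List[float]:
    """Toy 'model': guess every hidden token as the mean of the visible ones."""
    hidden_set = set(hidden)
    visible = [t for i, t in enumerate(masked) if i not in hidden_set]
    guess = sum(visible) / len(visible)
    return [guess if i in hidden_set else t for i, t in enumerate(masked)]


def reconstruction_loss(
    original: Sequence[float], predicted: Sequence[float], hidden: Sequence[int]
) -> float:
    """Mean squared error, measured only on the hidden positions."""
    return sum((original[i] - predicted[i]) ** 2 for i in hidden) / len(hidden)


rng = random.Random(0)
tokens = [2.0] * 8  # hypothetical unlabeled signal; no human labels involved
masked, hidden = mask_tokens(tokens, ratio=0.5, rng=rng)
loss_without_guess = reconstruction_loss(tokens, masked, hidden)
loss_with_guess = reconstruction_loss(tokens, mean_fill(masked, hidden), hidden)
```

The labels here come from the data itself, which is why no human annotation is needed; in a real model the loss would drive updates to the network's weights.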

Frequently Asked Questions

What is the foundation model’s function?

Foundation models such as the IBM/NASA geospatial model are built with the Transformer architecture, which compresses huge amounts of data into structured representations. These models learn fundamental structures from the data and can be further trained to perform specific tasks, such as studying satellite images to find features like wildfires or flooding.

What is the significance of foundation models?

Foundation models have transformed a variety of areas, including natural language processing (NLP) and geospatial analytics. They enable faster and more precise creation of AI applications by supplying a base that can be tailored to various tasks without massive reconfiguration.

What can foundation models do for us?

Foundation models, such as the one created by IBM and NASA, are used in a variety of ways, including early warning of disasters, observing the impacts of climate change and evaluating biodiversity. They use huge datasets to reveal patterns and insights that might be difficult for people to recognize, which ultimately assists scientific discovery and risk evaluation.

What are the problems associated with foundation models?

While foundation models provide many advantages, they also pose difficulties. They require access to huge amounts of data, are prone to security threats and can consume a lot of energy, which makes cloud deployment difficult. However, efforts are underway to tackle these issues through collaboration and advances in technology.

What is the state of foundation models?

Foundation models are continually evolving, with improvements to architectures, training techniques and data augmentation strategies. This evolution allows them to tackle increasingly difficult tasks, enabling new technologies and increasing their value across a variety of applications.

How can developers make use of foundation models?

Developers can build on foundation models to create AI applications tailored to specific requirements or questions. The models serve as a starting point, allowing developers to focus on refining and tailoring the application to their use case. Furthermore, initiatives such as the IBM/NASA collaboration are designed to make foundation models and associated data more accessible to the research community, encouraging collaboration and development.